Lab 4

Introduction

In class we have been learning about various image functions, and this lab was meant to give the class first-hand experience with how these functions work. The goal is that after completing this lab, we will have gained skills in image processing and in enhancing images for visual interpretation, and will be able to delineate a study area from a larger satellite image scene.

This lab has five major activities, each meant to build our skills with image functions: delineating a study area from a larger satellite image scene, optimizing the spatial resolution of images for visual interpretation, applying some radiometric enhancement techniques to optical images, linking a satellite image to Google Earth as a source of ancillary information, and working with several different methods of resampling satellite images.

Methods

Section 1

This section covers subsetting an image with an Inquire Box. Image subsetting is the delineation of a region of interest from a larger satellite image scene. The following are step-by-step directions for how I created my subset image of the UWEC area. To start, I opened the image eau_claire_2011.img. After activating the Raster tools, I clicked on Inquire Box. This process can be seen below in Figure 1.

Figure 1: This is a screenshot of the process behind turning on the Inquire Box. Image created by: Cyril Wilson

This created a small white box outline on my screen, which I repositioned over the UWEC area. Next, I clicked Subset & Chip and then Create Subset Image. This process can be seen below in Figure 2.

Figure 2: This is a screenshot of the process behind selecting the Create Subset Image tool. Image created by: Cyril Wilson

This tool automatically fills in the input file from the image in the viewer. I created a folder named Image_Subset to hold the output, and in the Output File field I navigated to that folder and entered the name eau_claire_2011sb_ib.img. I then clicked the From Inquire Box button so that the subset uses the Inquire Box coordinates. Clicking OK created the subset image of the UWEC area and saved it in my folder.
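Conceptually, a rectangular subset just keeps the pixels inside a row and column window. The minimal sketch below assumes a single band stored as a list of rows; the function name and the toy band are hypothetical illustrations, not how ERDAS implements Subset & Chip.

```python
def subset_window(band, row_start, row_end, col_start, col_end):
    """Copy the pixels inside a rectangular row/column window.

    Rows row_start..row_end-1 and columns col_start..col_end-1 are kept,
    which is the role the Inquire Box plays in the lab.
    """
    if not (0 <= row_start < row_end <= len(band)):
        raise ValueError("row window falls outside the raster")
    if not (0 <= col_start < col_end <= len(band[0])):
        raise ValueError("column window falls outside the raster")
    return [row[col_start:col_end] for row in band[row_start:row_end]]


# A toy 4 x 5 band where each value encodes its own position (row * 10 + col).
band = [[r * 10 + c for c in range(5)] for r in range(4)]
print(subset_window(band, 1, 3, 2, 5))  # [[12, 13, 14], [22, 23, 24]]
```

For a multiband image, the same window would be applied to every band.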

Section 2

This section covers subsetting an image with an area of interest (AOI) shapefile. To begin, I opened the eau_claire_2011.img image in my viewer once again. I then brought a shapefile of Eau Claire and Chippewa counties, ec_cpw_cts.shp, into the viewer in order to subset those counties from the original image, and used it to create the AOI file. While holding down the Shift key, I selected the two counties, which turned them yellow. I then clicked Home and selected Paste from Selected Object. This process can be seen below in Figure 3.

Figure 3: This is a screenshot of the process of selecting the paste from selected object tool. Image created by: Cyril Wilson

This created an AOI file, which I then saved to my folder by clicking File, Save As, AOI Layer As, and naming the layer ec_cpw_cts.aoi. I then clicked the Subset & Chip button again to bring up the Subset window, set the output folder to my created folder, and named the output image ec_cpw_2011sb_ai.img. The AOI subset image was then saved into my folder.
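Subsetting with an AOI differs from the Inquire Box in that pixels are kept only if they fall inside a polygon, such as the two county outlines. The minimal sketch below assumes the polygons have already been converted to pixel coordinates and tests each pixel center with the even-odd (ray casting) rule; the function names and toy data are hypothetical, and ERDAS's own handling of pixels on polygon edges may differ.

```python
def point_in_polygon(x, y, polygon):
    """Even-odd (ray casting) test for whether point (x, y) lies inside polygon.

    polygon is a list of (x, y) vertices; the last vertex joins the first.
    """
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through y can be crossed.
        if (y1 > y) != (y2 > y):
            # x where this edge meets that horizontal line
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:  # the ray going right from (x, y) crosses the edge
                inside = not inside
    return inside


def subset_by_aoi(band, polygons, nodata=0):
    """Keep pixels whose centers fall in any AOI polygon; set the rest to nodata.

    Pixel (row, col) has its center at (col + 0.5, row + 0.5), and the polygons
    are assumed to already be in these pixel coordinates.
    """
    return [
        [
            value
            if any(point_in_polygon(c + 0.5, r + 0.5, p) for p in polygons)
            else nodata
            for c, value in enumerate(row)
        ]
        for r, row in enumerate(band)
    ]


band = [[1] * 4 for _ in range(4)]
square = [(1, 1), (3, 1), (3, 3), (1, 3)]
for row in subset_by_aoi(band, [square]):
    print(row)
```

Passing a list of polygons mirrors selecting both counties at once: a pixel is kept if it lies in either one.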

Section 3

This section deals with image fusion. Here I created a higher spatial resolution image from a coarse resolution image for visual interpretation purposes by merging the coarse multispectral image with a higher resolution panchromatic image (pan-sharpening). I began by opening the image ec_cpw_200.img, then opened a second viewer and loaded the panchromatic image ec_cpw_200pan.img. I clicked Raster to activate the raster tools, then clicked the Pan Sharpen icon and selected Resolution Merge. This process can be seen below in Figure 4.

Figure 4: This is a screenshot of the process of selecting the Resolution Merge tool. Image was created by: Cyril Wilson

A Resolution Merge window then appeared. The next five steps are shown below in Figure 5.

Next, I brought the pan-sharpened image ec_cpw_200ps.img into the second viewer and compared it with the multispectral image of the same area. To make the comparison easier, I synced the two views.
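The lab does not say which merge method was selected in the Resolution Merge window, so as an illustration only, here is a minimal sketch of the Brovey transform, one common pan-sharpening method. It assumes the multispectral bands are first resampled (here by simple pixel repetition) onto the panchromatic grid, then each band is scaled by pan / (sum of the bands) at every pixel. The function names and toy values are hypothetical.

```python
def upsample_nearest(band, factor):
    """Repeat each pixel factor x factor times so a coarse band matches the pan grid."""
    out = []
    for row in band:
        wide = [v for v in row for _ in range(factor)]
        out.extend([list(wide) for _ in range(factor)])
    return out


def brovey(ms_bands, pan):
    """Brovey transform: scale each multispectral band by pan / (sum of bands).

    ms_bands must already be on the same grid as pan. Pixels where the bands
    sum to zero are set to 0.0 to avoid dividing by zero.
    """
    rows, cols = len(pan), len(pan[0])
    out = [[[0.0] * cols for _ in range(rows)] for _ in ms_bands]
    for r in range(rows):
        for c in range(cols):
            total = sum(b[r][c] for b in ms_bands)
            for k, b in enumerate(ms_bands):
                out[k][r][c] = b[r][c] * pan[r][c] / total if total else 0.0
    return out


# One coarse pixel per band, merged with a 2 x 2 panchromatic patch.
red = upsample_nearest([[10]], 2)
green = upsample_nearest([[30]], 2)
pan = [[40, 80], [20, 0]]
sharp_red, sharp_green = brovey([red, green], pan)
print(sharp_red)    # [[10.0, 20.0], [5.0, 0.0]]
print(sharp_green)  # [[30.0, 60.0], [15.0, 0.0]]
```

The spatial detail comes from the pan band, while the ratio between the bands (the color) comes from the multispectral image.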

Section 4

In this section, I performed a preliminary radiometric enhancement technique, haze reduction, to improve the spectral and radiometric quality of an image. To begin, I opened the image eau_claire_2007.img and clicked Raster to activate the tools. I then clicked Radiometric and Haze Reduction, shown below in Figure 6.

Figure 6: This is a screenshot of the process of selecting the Haze Reduction tool. Image created by: Cyril Wilson

This opened a window where I chose eau_claire_2007.img as the input file. For the output, I navigated to my created folder and named the new file ec_2007_haze_r.img. I then opened a second viewer and brought in my newly created image to compare the two.
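The lab does not describe the algorithm behind ERDAS's Haze Reduction tool. To illustrate the general idea with a different, simpler technique, here is a sketch of dark object subtraction: it assumes the darkest pixel in a band (for example deep water or shadow) should be close to zero, treats any brightness it has as haze, and subtracts that value from every pixel. The function name and sample values are hypothetical.

```python
def dark_object_subtraction(band):
    """Subtract the band's darkest value from every pixel (a simple haze correction).

    Assumes the darkest pixel in the scene should be near zero, so its
    brightness is treated as an additive haze offset for the whole band.
    """
    dark = min(min(row) for row in band)
    return [[v - dark for v in row] for row in band]


band = [[12, 40], [15, 90]]
print(dark_object_subtraction(band))  # [[0, 28], [3, 78]]
```

In practice this would be applied to each band separately, since haze usually affects the shorter (blue) wavelengths most.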

Section 5

This section introduces one of the recent developments in ERDAS IMAGINE 2011 that was not in any previous version of the software: linking a view to Google Earth. This can be helpful for collecting training data for image classification, provided the image being classified is recent, since Google Earth imagery is generally more current. I began by opening the image eau_claire_2011.img and fitting it to the frame. I then clicked Google Earth and then Connect to Google Earth, and moved the Google Earth viewer to a different monitor so it wouldn't get in the way. Next, I matched the Google Earth viewer to the original viewer. These last two steps can be seen below in Figure 7.

Figure 7: This is a screenshot of the process of connecting to Google Earth and then matching the Google Earth viewer to the original Eau Claire image. Image created by: Cyril Wilson

I could then zoom in on the original image, and the Google Earth view would zoom to the same location. I zoomed in further to compare the pixels of the two images.
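Presumably the two viewers can stay in step because they are matched by geographic location rather than by pixel position. As a minimal sketch of the first half of that idea, assuming a north-up image whose upper-left corner coordinates and square pixel size are known, a pixel's row and column convert to map coordinates with a simple affine formula. The function name and origin values below are hypothetical, and the further reprojection to the latitude/longitude that Google Earth uses is left out.

```python
def pixel_to_map(row, col, origin_x, origin_y, pixel_size):
    """Map (x, y) of a pixel's upper-left corner in a north-up image.

    origin_x, origin_y: map coordinates of the image's upper-left corner.
    pixel_size: ground size of one square pixel, in map units.
    """
    x = origin_x + col * pixel_size
    y = origin_y - row * pixel_size  # rows count downward, map y counts upward
    return x, y


# Hypothetical UTM-style origin and a 30-unit pixel, for illustration only.
print(pixel_to_map(10, 20, 600000.0, 4970000.0, 30.0))  # (600600.0, 4969700.0)
```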

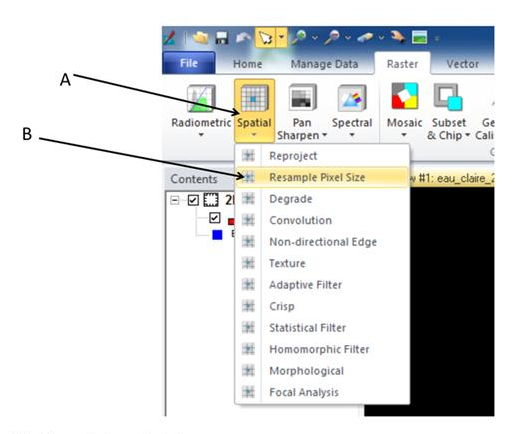

Section 6

This section deals with resampling, the process of changing the pixel size of an image. Depending on the analytical need, resampling can decrease or increase the pixel size. First, I opened the image eau_claire_2001.img in a viewer and fit it to the frame. I then checked the image's metadata to view its pixel size. Under Raster, I clicked Spatial and then Resample Pixel Size. This process can be seen below in Figure 8.

Figure 8: This is a screenshot of the process of choosing the Resample Pixel Size tool. Image created by: Cyril Wilson

A window popped up, and I chose the original image as the input. For the Output File, I named it eau_claire_nn.img and placed it in my created folder. I chose Nearest Neighbor as the resampling method, changed the pixel size from 30 m × 30 m to 20 m × 20 m, and clicked OK.
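Changing the pixel size while covering the same ground area changes the number of rows and columns: going from 30 m to 20 m pixels multiplies each dimension by 30 / 20 = 1.5. The quick sketch below works through that arithmetic; the 1000 × 800 image size is hypothetical (not the lab image's real dimensions), and rounding a partial edge pixel up is an assumption, since the software may handle the edge differently.

```python
import math


def resampled_shape(rows, cols, old_size, new_size):
    """Rows and columns needed to cover the same ground extent at a new pixel size.

    A partial pixel at the edge is rounded up to a whole pixel (an assumption).
    """
    return (
        math.ceil(rows * old_size / new_size),
        math.ceil(cols * old_size / new_size),
    )


# Hypothetical 1000-row by 800-column image resampled from 30 m to 20 m pixels.
print(resampled_shape(1000, 800, 30, 20))  # (1500, 1200)
```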

I then repeated the whole process, this time selecting Bilinear Interpolation instead of Nearest Neighbor. Finally, I opened each of the newly created images, one at a time, in the second viewer and compared it to the original image.
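The two methods differ in how each new pixel gets its value. Nearest neighbor copies the value of the single closest original pixel, so values are only repeated, never changed; bilinear interpolation takes a distance-weighted average of the four nearest original pixel centers, which smooths edges and may explain the blurrier look described in the results. The sketch below assumes a single band stored as a list of rows, with pixel centers at half-pixel offsets and edges clamped; the function names are hypothetical, and ERDAS's exact edge handling may differ.

```python
import math


def _clamp(v, lo, hi):
    return max(lo, min(v, hi))


def resample(band, old_size, new_size, method="nearest"):
    """Resample a band from old_size to new_size pixels over the same ground extent.

    method="nearest": copy the input pixel that contains each output pixel's center.
    method="bilinear": distance-weighted average of the 4 nearest input pixel centers.
    """
    rows, cols = len(band), len(band[0])
    step = new_size / old_size  # output pixel width measured in input pixels
    out_rows = math.ceil(rows * old_size / new_size)
    out_cols = math.ceil(cols * old_size / new_size)
    out = []
    for r in range(out_rows):
        cy = (r + 0.5) * step  # output pixel center, in input pixel units
        new_row = []
        for c in range(out_cols):
            cx = (c + 0.5) * step
            if method == "nearest":
                i = _clamp(int(cy), 0, rows - 1)
                j = _clamp(int(cx), 0, cols - 1)
                new_row.append(band[i][j])
            elif method == "bilinear":
                # Shift so integer coordinates land on input pixel centers.
                y = _clamp(cy - 0.5, 0, rows - 1)
                x = _clamp(cx - 0.5, 0, cols - 1)
                y0, x0 = int(y), int(x)
                y1, x1 = min(y0 + 1, rows - 1), min(x0 + 1, cols - 1)
                fy, fx = y - y0, x - x0
                top = band[y0][x0] * (1 - fx) + band[y0][x1] * fx
                bottom = band[y1][x0] * (1 - fx) + band[y1][x1] * fx
                new_row.append(top * (1 - fy) + bottom * fy)
            else:
                raise ValueError(f"unknown method: {method}")
        out.append(new_row)
    return out


band = [[0, 30], [60, 90]]
print(resample(band, 30, 15, "nearest"))
print(resample(band, 30, 15, "bilinear"))
```

With this 2 × 2 toy band resampled from 30 to 15, nearest neighbor simply repeats each value in a 2 × 2 block, while bilinear produces intermediate values such as 7.5 and 22.5 between neighbors.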

Results

For each section, the results are either an image I created or my answers to one or two questions. My created images and answers for each section are shown below.

Section 1

Figure 9: This is a screenshot of the subset image that I created in the first section of this lab.

Section 2

Figure 10: This is a screenshot of the subset (aoi) image that I created in the second section of this lab.

Section 3

Q1: Outline the differences you observed between your input reflective image and your pan-sharpened image.

The first thing I noticed was how much clearer the pan-sharpened image is than the reflective image. An example of this can be seen in the image below, where the colored image is the reflective image and the grayscale image is the pan-sharpened one. The reflective image looks less clear largely because it is so pixelated. The only other difference that really jumped out at me was how much easier it is to identify features on the pan-sharpened image than on the reflective image.

Section 4

Q2: Outline the differences you observed between your input reflective image and your haze reduction image.

The difference between these two images is miraculous! The haze reduction image is much clearer than the original reflective image. In the reflective image there were clouds in the bottom right-hand corner, and the whole image had a haze over it that made identifying features more difficult. After haze reduction, the haze is gone and so are the clouds that were in the original image. The image is now much better suited to visual interpretation.

Section 5

Q3: Can the Google Earth view serve as an Image interpretation key? Why or why not?

Yes, the Google Earth view can serve as an image interpretation key. It covers the same area as the image being interpreted, so an interpreter can use it as a reference when identifying features. Its resolution is actually superior to that of the reflective image, and one could argue that the Google Earth image would be a more effective way to interpret an area.

Q4: If so, what type of image interpretation key is it?

The Google Earth view would serve as a Selective Key.

Section 6

Q5: Is there any difference in the appearance of the original image and the resampled image generated using the nearest neighbor resampling method? If yes or no, explain your observations.

With these two images, I'm not really sure I see much of a difference between the original and the resampled image. I tried zooming in, and the images looked very similar. There do appear to be more pixels, as there should be, but I'm just not seeing much of a difference. Maybe it's time for new glasses?

Q6: Is there any difference in the appearance of the original image and the resampled image generated using the bilinear interpolation resampling method? If yes or no, explain your observations.

I do notice a difference between the two, though I'm not sure it is a good difference for the bilinear interpolation image. That image seems much blurrier than the original image. When zoomed in, you can definitely tell that the bilinear image has more pixels and that everything blends together better, but the overall image just comes off as blurry.
